Introduction

Engaging with audiences on social media is an undeniable opportunity for brands to expand their reach and drive performance. By leaning into the participatory culture of social platforms, brands are finding new ways to enter conversations and connect with their audience. But the online forum isn’t without its risks. Brands that overlook the importance of protecting their online touchpoints from hate comments, harassment and spam risk compromising both their community members and brand image. Community protection is the first step to growing an online community because it creates conditions that encourage free participation and foster a sense of belonging.

In our first article, Protecting the Conversation (linked), we identified the growing prevalence of harmful comments and their impact on online brand communities. Now, we’re diving deeper to understand what different types of harmful comments look like, and their direct impact on those engaging with your brand platform. Our aim is to equip marketing and community management teams with the practical knowledge to recognise harmful comments in all their forms, and the confidence to respond effectively.

What are harmful comments?

Harmful comments show up in many forms on social media, and each one carries its own potential to damage brand reputation and put online communities at risk. In fact, 21% of comments left on social media are harmful to brand communities. The comment section is meant to be a space for sharing opinions, asking questions, or celebrating a brand’s latest post. When harmful comments take over, potential customers instead see hateful remarks, trolling, or explicit content that quickly turns curiosity into discomfort and leaves a negative impression of the brand. At Sence, we moderate across eight key categories of harmful comments: hate, harassment, spam/scams, violence, extremism, illicit activity, self-harm and sexual content.

Understanding the full spectrum of harmful comments is crucial for building a safe and engaging environment where your community can grow. Let’s break down what these harmful comments look like across five different classes and what tools marketers have to address them swiftly.

Five classes of harmful comments

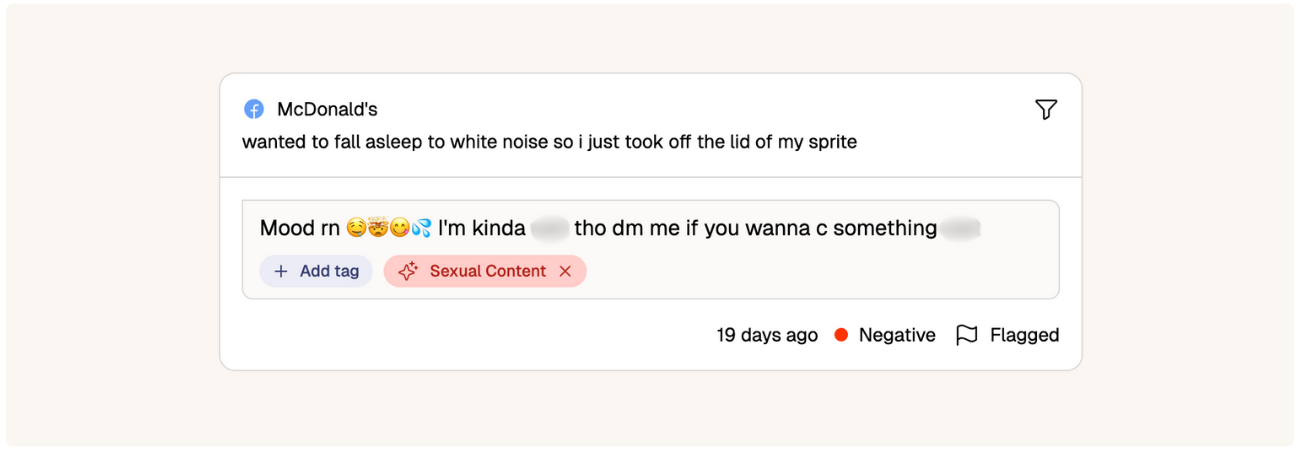

Hate

Promotes hatred, prejudice, or hostility toward individuals or groups, often based on characteristics like race, ethnicity, gender, religion, or nationality. Hate comments can be targeted at individuals or a brand overall and make up 26% of all harmful comments Sence has analysed on social media.


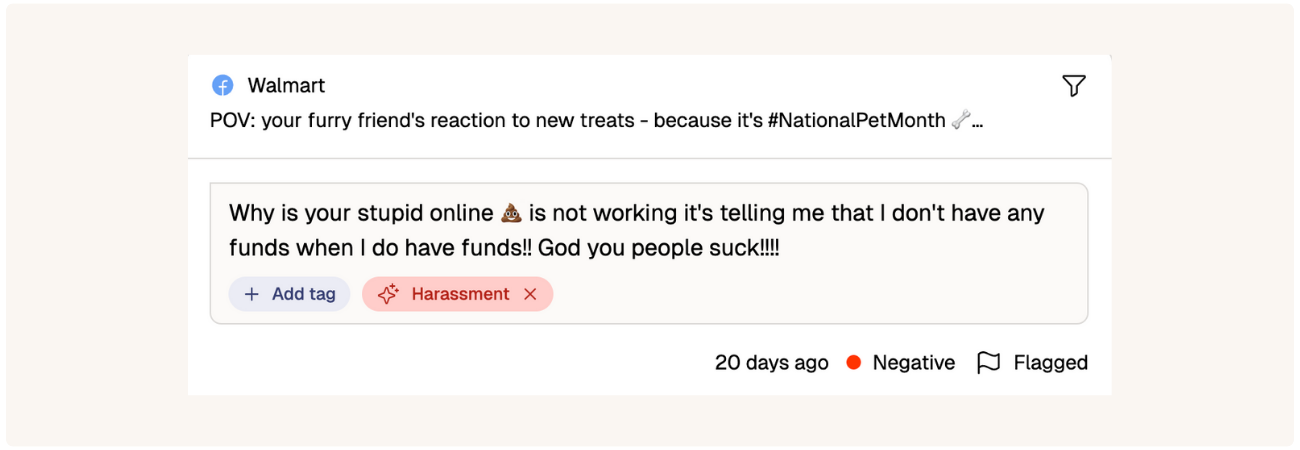

Harassment

Aggressive comments, threats, or repeated targeting that aims to intimidate, shame, or belittle others. Harassment includes behaviours like cyberbullying, name-calling, body shaming, and sustained personal attacks. It’s another common type of harmful content, accounting for 27% of all harmful comments.

Spam and scams

While sometimes dismissed as “low harm,” spam and scams pose real risks to brand credibility and user safety. They consist of unwanted, repetitive, or misleading content, typically of a commercial, promotional, or fraudulent nature. This includes phishing links, bot comments, fake giveaways, and counterfeit product ads. While less personal, these comments harm platform integrity and expose users to financial or data risk. Spam and scams account for 37% of harmful comments analysed by Sence, making them the most common class of harmful content on social media.

Violence

Promotes or glorifies physical harm either directly or indirectly and often includes language that threatens, incites, or encourages violent acts. This can range from explicit threats against individuals, groups, or institutions, to the glorification of violence in ways that can inspire harmful actions. Violence is a severe form of harm, making up 5% of all harmful comments on social media.

Sexual content

This includes pornographic or sexually explicit material, as well as sexual language or solicitation. Such content violates community guidelines and creates an unsafe environment. Although sexual content is less frequent, making up 2% of harmful comments analysed by Sence, its presence immediately leaves a bad impression and breaks down trust with your audience.

A solution that promotes community

As brands engage more deeply with audiences on social media, the challenge of protecting communities from harmful comments becomes increasingly crucial. As shown above, harmful content can look pretty ugly. It exists in many forms and quickly disrupts engagement, damaging a brand’s reputation in the process. The good news is that Sence has created a solution to tackle this challenge head-on.

Sence is built to understand and act on online conversations at scale. At its core, it’s a Conversational Intelligence model that helps teams make sense of what’s happening in comment sections – whether that’s surfacing valuable insights or protecting communities from harmful content. Comment moderation is just one part of what the platform does, but it’s an essential feature for growing an online community.

At Sence, our partnership approach means we create bespoke AI models that are tailored to fit a brand's unique engagement strategy. What’s acceptable in one community may not be in another, which is why Sence allows your team to set the standards. Once the boundaries are set, Sence offers two options: it can automatically moderate content in real time, or your team can review flagged comments and decide whether to hide them, ensuring full control over your community’s safety and tone. And unlike rigid keyword filters, Sence uses Conversational Intelligence to process the nuance behind every comment, tagging tone, intent, and context.

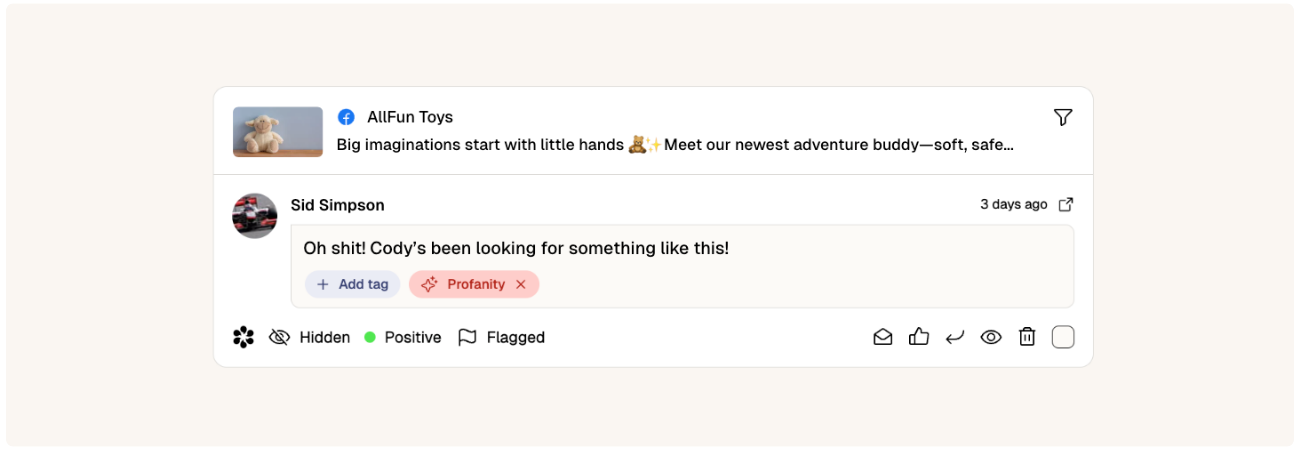

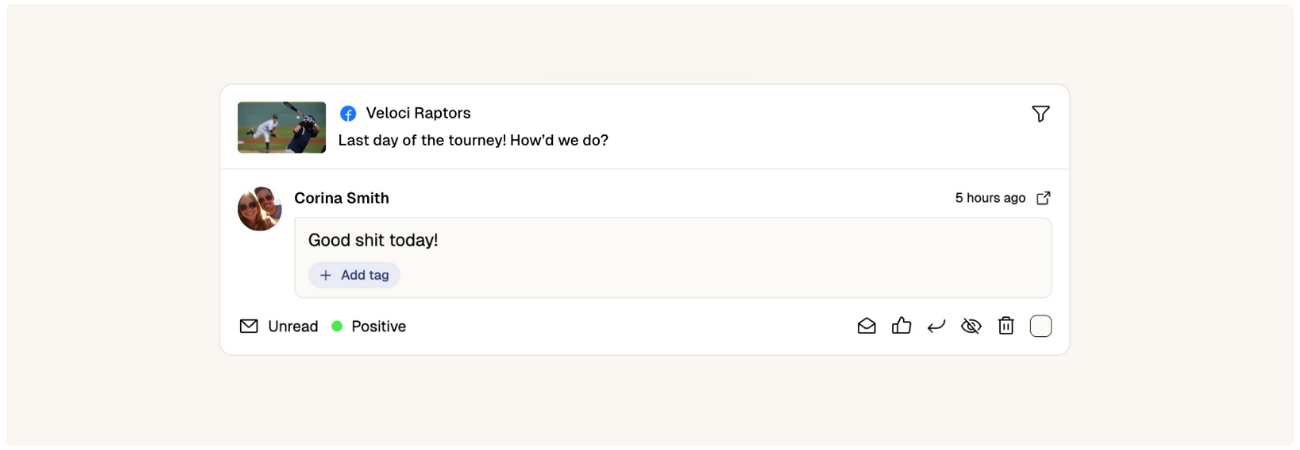

Here’s how that looks in practice:

Example 1: A brand allows negative comments and mild profanity related to a game or skill, but moderates racial slurs and threats.

Hidden/flagged ❌

Allowed ✔️

Example 2: A toy brand filters out all profanity, regardless of whether the context is positive or negative.

Hidden/flagged ❌

Example 3: A brand that filters out all profanity vs. a brand that allows profanity in a positive context.

Hidden/flagged ❌

Allowed ✔️
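
For teams who think in configuration, the three examples above can be sketched as simple per-brand policies. This is only an illustration of the idea, not Sence's actual product or API: the names `BrandPolicy`, `Mode` and `decide`, the label strings, and the tone values are all hypothetical, and the sketch assumes each comment has already been tagged with labels and a tone by some upstream model.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTO = "auto"      # hide matching comments immediately
    REVIEW = "review"  # flag matching comments for a human decision


@dataclass(frozen=True)
class BrandPolicy:
    """Hypothetical per-brand standards (an assumption, not Sence's config)."""
    blocked_labels: frozenset[str]
    mode: Mode = Mode.AUTO
    # If True, profanity is tolerated when the comment's tone is positive.
    allow_positive_profanity: bool = False


def decide(labels: set[str], tone: str, policy: BrandPolicy) -> str:
    """Return "allowed", "hidden" or "flagged" for an already-tagged comment."""
    hits = labels & policy.blocked_labels
    if policy.allow_positive_profanity and tone == "positive":
        hits = hits - {"profanity"}
    if not hits:
        return "allowed"
    return "hidden" if policy.mode is Mode.AUTO else "flagged"


# Example 1: mild profanity is tolerated, hate and threats are not.
gaming = BrandPolicy(blocked_labels=frozenset({"hate", "violence"}))
# Example 2: a toy brand filters all profanity, whatever the tone.
toy = BrandPolicy(blocked_labels=frozenset({"hate", "violence", "profanity"}))
# Example 3 (second brand): profanity is allowed only in a positive context.
# Routing to human review here is an illustrative choice, not from the article.
lenient = BrandPolicy(
    blocked_labels=frozenset({"hate", "violence", "profanity"}),
    mode=Mode.REVIEW,
    allow_positive_profanity=True,
)

print(decide({"profanity"}, "negative", gaming))   # allowed
print(decide({"hate"}, "negative", gaming))        # hidden
print(decide({"profanity"}, "positive", toy))      # hidden
print(decide({"profanity"}, "positive", lenient))  # allowed
print(decide({"profanity"}, "negative", lenient))  # flagged
```

The point of the sketch is that the same tagged comment can lead to different outcomes depending on the standards each brand sets, and that a brand can choose between automatic hiding and human review.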

In summary

Harmful comments come in many shapes, including hate, harassment, spam and scams, violence, and explicit content, and each one chips away at a brand's reputation. Keeping comment sections clean and safe may seem like a daunting task for marketing teams, but that’s where Sence steps in. With bespoke moderation across every class of harmful content, Sence doesn’t just block bad actors; it clears the way for genuine voices to stand out. Whether automatically moderating in real time or allowing your team to manage flagged content, Sence empowers marketers to protect their community and grow genuine engagement on social platforms.
